In the last couple of years artificial neural networks (ANN) achieved new state-of-the-art results in a multitude of research areas. Their data-driven training process usually only requires very little in-depth domain knowledge. However, a successful training demands experience and knowledge with respect to ANNs and their design as well as optimization, in other words, an expensive hyperparameter optimization. In a recent trend, research is conducted to facilitate and automate various aspects of this process. In particular, the automatization via Reinforcement Learning is becoming increasingly popular. To this end, we will introduce the most common state-of-the-art RL methods and discuss their application to a selection of modern AutoML papers:

- Learn a gradient descent optimizer

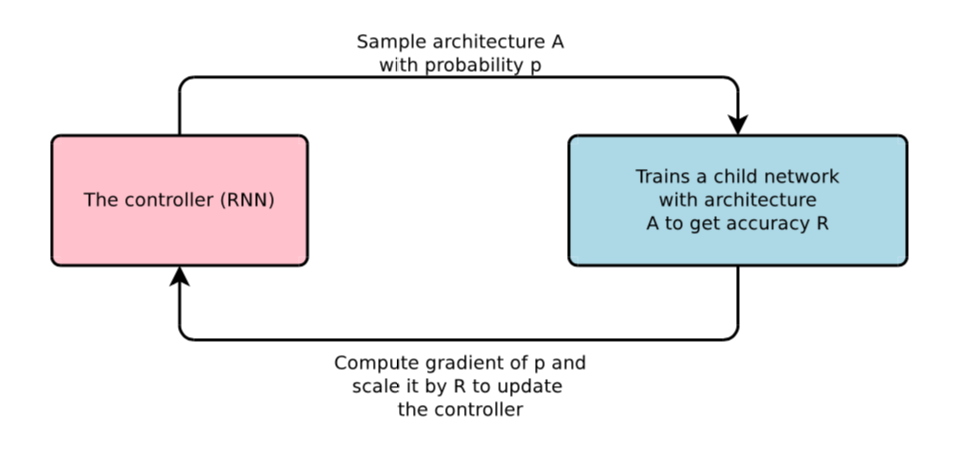

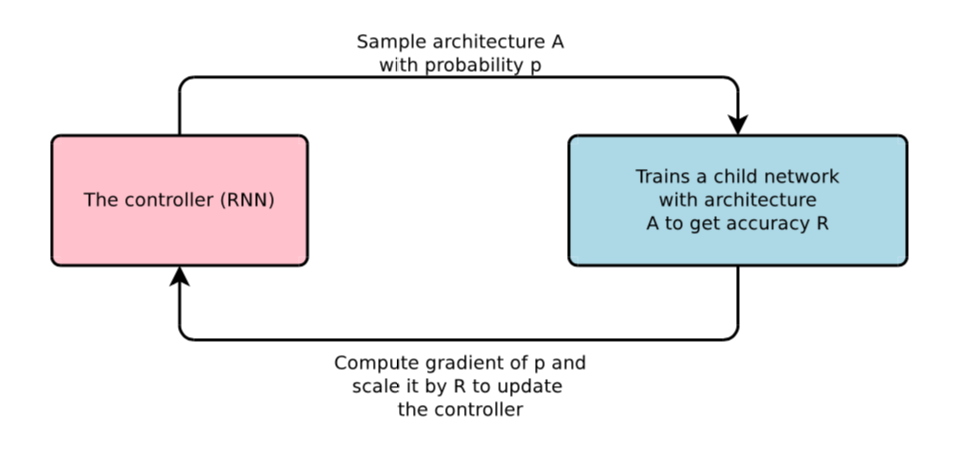

- Neural architecture search (NAS)

- Searching for activation functions

- Learning data augmentation and batching

- …

Thursdays (14:00-16:00) - Room 02.09.023

Lecturers: Prof. Dr. Laura Leal-Taixé and Tim Meinhardt.

ECTS: 5

SWS: 2

Prerequisites

- Introduction to Deep Learning (I2DL) (IN2346)

- Previous knowledge in Reinforcement Learning preferred.

Papers

- Proximal Policy Optimization Algorithms. Schulman et al. (2017, July 19).

- Asynchronous Methods for Deep Reinforcement Learning. Mnih et al. (2016, February 4).

- AutoAugment: Learning Augmentation Policies from Data. Cubuk et al. (2018, May 24).

- Learning What Data to Learn. Fan et al. (2017, February 28).

- Neural Architecture Search with Reinforcement Learning. Zoph et al. (2016, November 5).

- Learning Transferable Architectures for Scalable Image Recognition. Zoph et al. (2017, July 21).

- DARTS: Differentiable Architecture Search. Liu et al. (2018, June 23).

- Searching for Activation Functions. Ramachandran et al. (2017, October 16).

- Learning to learn by gradient descent by gradient descent. Andrychowicz et al. (2016, June 14).

- Learning to Optimize. Li et al. (2016, June 6).

- Learning step size controllers for robust neural network training. Daniel et al. (2016).

- Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. Finn et al. (2017, March 9).

Please feel free to suggest your preferred AutoML paper.

Schedule

- 25.01 (13.00 - 15.00) - Pre-course meeting (slides)

- 25.04 (14.00 - 16.00) - Introduction and paper matching

- 16.05 (14.00 - 16.00) - 1. + 2.

- 23.05 (14.00 - 16.00) - 3. + 4.

- 06.06 (14.00 - 16.00) - 5. + 6.

- 04.07 (14.00 - 16.00) - 7. + 8.

- 11.07 (14.00 - 16.00) - 9. + 10.

- 18.07 (14.00 - 16.00) - 11. + 12.